Innovating Together: Challenges and Solutions for Integrating XR in Different Fields

April 2nd, 2024

On March 21st, we marked the fourth milestone of the TETRA ExperienceTwin project, focusing on enriching XR experiences through shared research insights. The day featured an interactive workshop addressing the integration challenges of XR solutions across different industries. It concluded with Klaas Bombeke's engaging presentation on leveraging XR data to enhance user experiences.

Jonas De Bruyne started our day with his insights on using (delayed) signalling to aid procedural learning during technical VR training. His main conclusion was that presenting trainees with delayed signalling might have a positive effect on their VR experience and learning outcomes. Two observations might explain this: (1) an increased feeling of flow during VR training with delayed nudges and (2) a decreased self-reported cognitive load after VR training with delayed nudges. Furthermore, Jonas presented new XR data analytics that are currently under investigation to find out whether they can provide objective insights into experience and performance.

While these findings are preliminary, they open up exciting possibilities for the future of VR training. A comprehensive report detailing the advantages of delayed nudges in VR education is expected at the project's conclusion.

Next, Jamil Joundi delved into the nuances of user experiences when interacting with a vaccination robot, highlighting the contrast between real-life prototype testing and virtual reality (VR) simulations. He first explained the process of creating a VR environment that not only features animations of the robot but also facilitates seamless communication between the robot's movements in real life and the virtual environment. Additionally, Jamil illustrated how the current experimental setup allowed for the simultaneous collection of a wide range of behavioural and physiological data.

Jamil’s findings revealed an interesting observation: while the VR prototype scored marginally higher in overall usability, participants tended to favour the real-life prototype for its ease of use. This suggests a complex relationship between user experience and the medium of interaction.

Stay tuned for the results of our further analyses, particularly focusing on heart rate variability, eye gaze, and electrodermal activity data collected during these tests.

Next, Aleksandra Zheleva illustrated the role of electrodermal activity (EDA) features in enhancing VR experiences through feedback loops. She shed light on the diverse physiological responses individuals have to stress in VR environments, ranging from significant changes to minimal alterations in electrodermal activity. This variation underscores the necessity for VR experiences tailored to each individual. Aleksandra suggested that real-time detection of specific EDA features, like changes in skin conductance response (SCR) amplitude, could enable the dynamic adjustment of VR settings through sophisticated algorithms. The implications of such adaptive feedback mechanisms are set to be a key topic in our next project meeting.
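To make the idea of such a feedback loop more concrete, here is a minimal sketch in Python. It is not the project's algorithm: the function names, the trough-to-peak heuristic, the thresholds, and the 0-to-1 `intensity` setting are all assumptions made for illustration. A real pipeline would likely filter the signal and separate its tonic and phasic components first.

```python
from typing import List, Sequence


def scr_amplitudes(signal: Sequence[float], min_amplitude: float = 0.05) -> List[float]:
    """Return trough-to-peak rises (in microsiemens) of at least min_amplitude.

    Hypothetical helper: a very rough stand-in for SCR detection on a window
    of skin conductance samples.
    """
    if len(signal) < 2:
        return []
    amplitudes: List[float] = []
    trough = signal[0]
    rising = False
    for prev, cur in zip(signal, signal[1:]):
        if cur > prev:
            if not rising:
                # A new rise starts at the previous sample.
                trough = prev
                rising = True
        elif cur < prev and rising:
            # The rise ended at the previous sample (the peak).
            amplitude = prev - trough
            if amplitude >= min_amplitude:
                amplitudes.append(amplitude)
            rising = False
    if rising:
        # The window ended while the signal was still rising.
        amplitude = signal[-1] - trough
        if amplitude >= min_amplitude:
            amplitudes.append(amplitude)
    return amplitudes


def adjust_intensity(current: float, amplitudes: Sequence[float],
                     high: float = 0.5, step: float = 0.1) -> float:
    """Lower a hypothetical 0..1 intensity setting after a strong SCR,
    raise it when no SCR was detected, and leave it unchanged otherwise."""
    if amplitudes and max(amplitudes) >= high:
        current -= step
    elif not amplitudes:
        current += step
    return min(1.0, max(0.0, current))


# Example: one window of skin conductance samples (made-up values).
window = [1.0, 1.0, 1.5, 2.0, 1.5, 1.0, 1.25, 1.0]
responses = scr_amplitudes(window)
new_intensity = adjust_intensity(0.5, responses)
```

Because individual responses differ so much, fixed thresholds like the ones assumed here would probably need to be calibrated per user.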

However, the success of these feedback loops hinges on the precision of the EDA signals collected. Thus, we've focused on optimizing the placement of EDA sensors within VR equipment. To this end, Rune Vandromme introduced two innovative prototypes that integrate the EmotiBit sensor box into an HTC Focus 3 controller. The prototypes were designed to be both functional and ergonomic while ensuring stable EDA signal capture. Interestingly, user feedback highlighted a preference for one prototype over the other based on hand size, pointing to ergonomics as a critical factor for our future testing endeavours.

Then, Sam Van de Walle gave us a snapshot of the progress he has made on a video highlight annotation tool in VR for medical training. He started with an overview of our Docker container setup, laying the foundation for a robust and scalable application architecture. A major highlight was the introduction of an intuitive video playback interface. Features like play/pause functionality, a progress bar, time skip options, and frame-based navigation will not only enhance the teacher’s experience but also provide precise control over video playback. The timeline and time-based logs bring a new dimension to video analysis, allowing teachers to pinpoint moments of interest efficiently. What is more, with colour-coded annotation pins and an easy-to-navigate video name display, teachers can mark important segments effortlessly.
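As a rough illustration of how time skips, frame-based navigation, and colour-coded pins could fit together, consider the Python sketch below. It does not reflect the actual tool's code: the `Pin` and `Timeline` classes, their fields, and the example labels are hypothetical.

```python
from bisect import insort
from dataclasses import dataclass, field
from typing import List


@dataclass(order=True)
class Pin:
    """A colour-coded annotation pin; pins are ordered by timestamp only."""
    time_s: float
    label: str = field(compare=False)
    colour: str = field(compare=False, default="red")


class Timeline:
    """Hypothetical playback state for one video."""

    def __init__(self, duration_s: float, fps: float) -> None:
        self.duration_s = duration_s
        self.fps = fps
        self.position_s = 0.0
        self.pins: List[Pin] = []

    def seek(self, time_s: float) -> float:
        # Clamp so the playhead never leaves the video.
        self.position_s = min(self.duration_s, max(0.0, time_s))
        return self.position_s

    def skip(self, delta_s: float) -> float:
        # Time skip: jump forwards or backwards by a number of seconds.
        return self.seek(self.position_s + delta_s)

    def step_frames(self, frames: int) -> float:
        # Frame-based navigation: convert a frame count to seconds.
        return self.seek(self.position_s + frames / self.fps)

    def add_pin(self, label: str, colour: str = "red") -> Pin:
        # Insert at the current playhead, keeping pins sorted by time.
        pin = Pin(self.position_s, label, colour)
        insort(self.pins, pin)
        return pin


timeline = Timeline(duration_s=60.0, fps=25.0)
timeline.skip(10)         # jump 10 seconds ahead
timeline.step_frames(5)   # then 5 frames further
timeline.add_pin("incision", colour="red")
timeline.seek(3.0)
timeline.add_pin("hand hygiene", colour="green")
```

Keeping the pins sorted by timestamp means a time-based log can be produced by simply iterating over `timeline.pins`.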

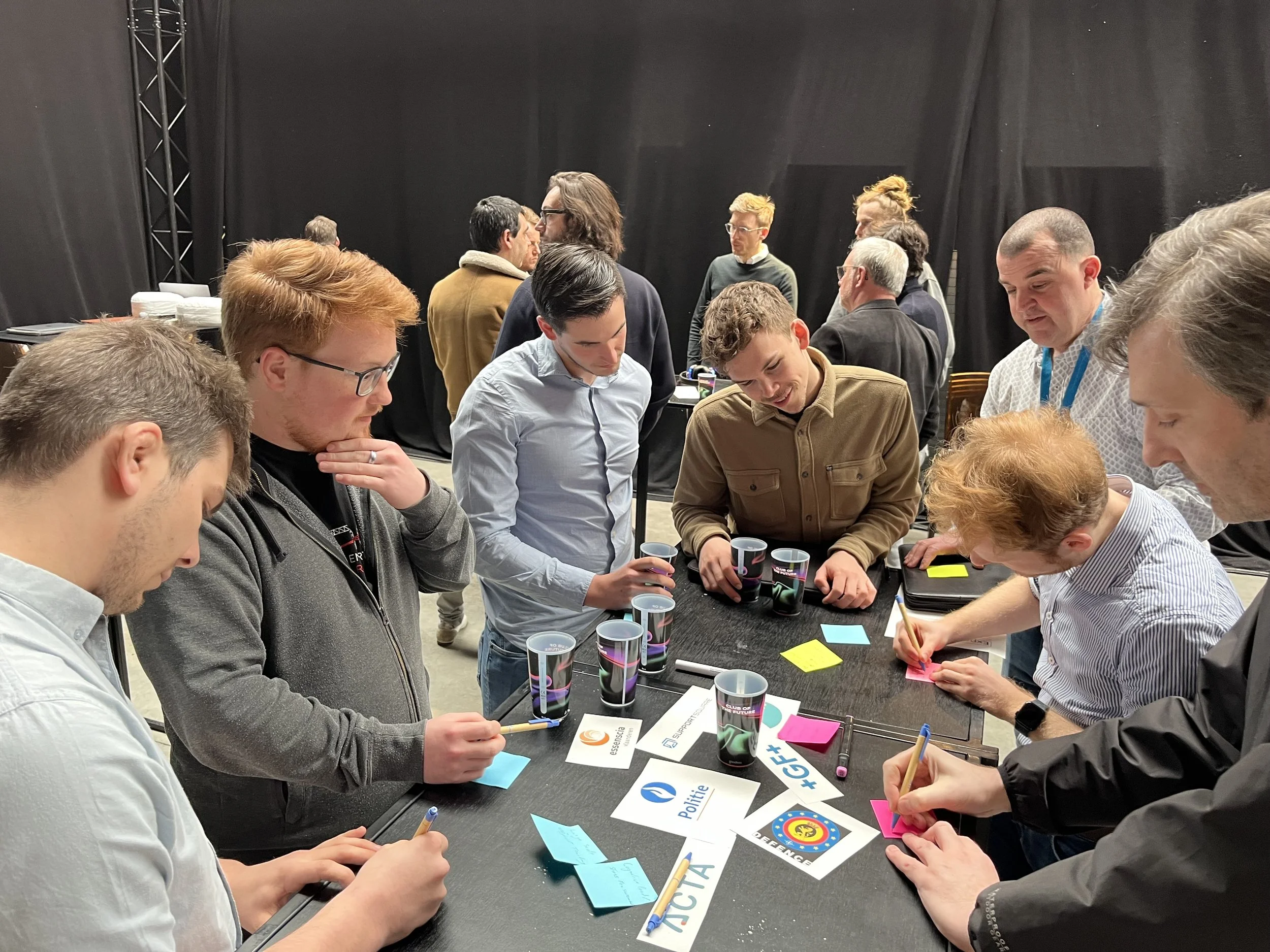

The second part of the day was marked by an interactive workshop in which four teams discussed the challenges they are currently facing in incorporating XR solutions in their various domains. Here is a sneak peek of our main findings.

One of the main topics that emerged was the adoption of VR among trainees. VR’s capability to connect trainers and trainees from different locations was pointed out as a feature that is likely to win trainees over: they can participate in training from the comfort of their own home, which eliminates travel costs. However, this also raised a question about the cost of providing a head-mounted display (HMD) for each trainee. Alongside cost, trust in technology also emerged as a significant concern, particularly regarding data privacy and safety.

In terms of content, two concerns were raised: optimizing cognitive load without over-relying on text, and minimizing the use of external sensors to avoid user resistance. Indeed, a number of participants pointed out the importance of keeping training scenarios as realistic as possible, using audio, pictograms, and images for simplicity, and leveraging existing controls and eye-tracking features within HMDs to enhance the learning experience.

The built-in eye-tracking capabilities of most widely available HMDs (alongside the incorporation of voice commands) were also pointed out as an effective and easy-to-use tool for 3D annotation of videos. This was highlighted as a significant feature that could simplify the training process for teachers.

To wrap up our insightful day, Klaas Bombeke delivered a compelling presentation on the potential of VR to capture and analyze user behaviour and learning data. He delved into the ways this data can be leveraged to refine and enhance the learning experience within VR training environments. The talk was also a part of imec-mict-UGent’s dynamic participation in FTI Week 2024. Read up on the rest of our contributions to FTI here.

Extra material: the workshop inspired us to create a virtual testing environment to test instructional design principles on usability, user experience and effectiveness. Master’s student Amber Aspeslagh built and used the testing environment in the context of her research internship and Master’s thesis. The report can be found here.